Why rankings of institutions are not a great idea…

It is that time of the year again, when we hear about the rankings given to schools in the UK (discussed on Radio 4’s Today programme this morning). While much time was given to the fact that they have “moved the goalposts” by changing the criteria on which the rankings are assessed, not much time was given to the old chestnut that ranking schools is simply not a good idea, because the ranks are too unstable to be meaningful.

Another ranking that is topical (in Higher Education circles at least) is the one that will arise from the National Student Survey. BSc Mathematics programmes generally have quite high satisfaction scores and relatively small class sizes, so relative rankings can change quite dramatically from year to year through random variation alone, even when the true underlying “satisfaction rating” of a degree programme has not changed.

I decided to simplify the problem and simulate it to illustrate the main issue.

I took 16 different percentages (the underlying probabilities of success / satisfaction), from 80% to 95% in steps of one percentage point. For each of these values I modelled 5 institutions (80 institutions in total), each with 15 years of data, with the sample size (number of respondents) in each year varying at random between 45 and 55. I then simulated the number of successful (or satisfied) students and hence the observed proportions, which depend on the corresponding sample sizes. These proportions were then turned into annual ranks, so in each of the 15 “years” an institution would be ranked between 1 and 80 (for clarity, lower ranks indicate better performance).
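A minimal Python sketch of a simulation along these lines follows. The post does not include its code, so the structure, the function names (`competition_ranks`, `simulate`) and the tie-handling rule (tied institutions share the better rank) are assumptions; only the parameters (16 rates from 80% to 95%, 5 institutions per rate, 15 years, 45 to 55 respondents) come from the text. Each yearly count of satisfied respondents is a binomial draw, made by summing independent yes/no trials with the standard library only.

```python
import random

# Parameters from the post: 16 true rates (80%..95%), 5 institutions per rate,
# 15 "years", and 45-55 respondents per institution per year.
TRUE_RATES = [pct / 100 for pct in range(80, 96)]  # 16 values
INSTITUTIONS_PER_RATE = 5
YEARS = 15
MIN_N, MAX_N = 45, 55


def competition_ranks(values):
    """Rank values so the highest gets rank 1.

    Ties share the better rank (1, 1, 3, ...). The post does not say how ties
    were handled, so this rule is an assumption.
    """
    return [1 + sum(v > x for v in values) for x in values]


def simulate(seed=1):
    """Return (true rate per institution, yearly_ranks[year][institution])."""
    rng = random.Random(seed)
    true_p = [p for p in TRUE_RATES for _ in range(INSTITUTIONS_PER_RATE)]
    yearly_ranks = []
    for _ in range(YEARS):
        proportions = []
        for p in true_p:
            n = rng.randint(MIN_N, MAX_N)  # respondents this year, bounds inclusive
            # Binomial(n, p) draw: count how many of n respondents are satisfied.
            satisfied = sum(rng.random() < p for _ in range(n))
            proportions.append(satisfied / n)
        yearly_ranks.append(competition_ranks(proportions))
    return true_p, yearly_ranks


if __name__ == "__main__":
    true_p, ranks = simulate()
    print(len(ranks), "years x", len(ranks[0]), "institutions")
```

`simulate()` returns the 80 true rates and a 15 × 80 table of yearly ranks; changing `seed` produces a different set of 15 “years”.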

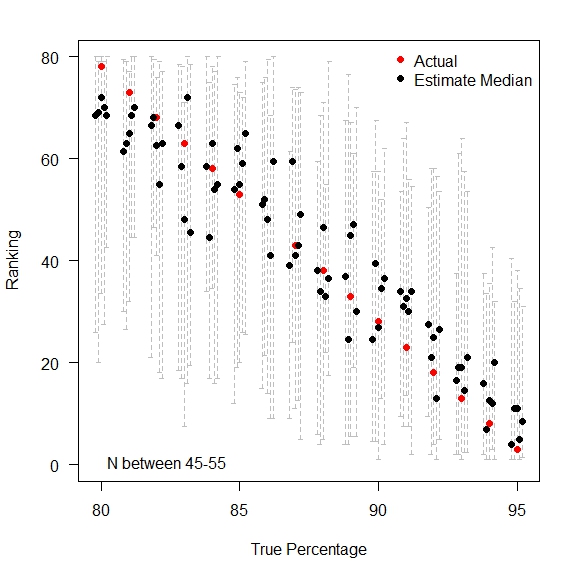

The graph illustrates the problem. The black dots show each institution’s median rank across the 15 “years” of data. The red dots show the “true” ranking, placed at the centre of each group of institutions with tied true rankings. The grey dashed lines in the background show the range of rankings each institution received year on year. Many of these lines span almost the entire range of possible rankings!
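The summaries plotted in the first graph could be computed from the yearly ranks roughly as below. This is a sketch, not the post’s code: the input layout (`yearly_ranks[year][institution]`) and the helper names are hypothetical. Because 5 institutions share each true rate, the group with the k-th best rate (k = 0 for 95%) occupies ranks 5k + 1 to 5k + 5, so its tied “true” rank is presumably the mid-point 5k + 3, which matches the red dots sitting at the centre of each group.

```python
import statistics


def rank_summary(yearly_ranks):
    """Per-institution (median, best, worst) rank across all years.

    yearly_ranks[year][institution] is a hypothetical layout for this sketch.
    """
    per_institution = list(zip(*yearly_ranks))  # transpose to [institution][year]
    return [(statistics.median(r), min(r), max(r)) for r in per_institution]


def true_mid_rank(group_index, group_size=5):
    """Shared mid-rank of a block of institutions tied on their true rate.

    group_index 0 is the best true rate (95%), occupying ranks 1..group_size.
    """
    first = group_index * group_size + 1
    last = first + group_size - 1
    return (first + last) / 2
```

The median and the best/worst ranks correspond to the black dots and the ends of the grey dashed lines respectively.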

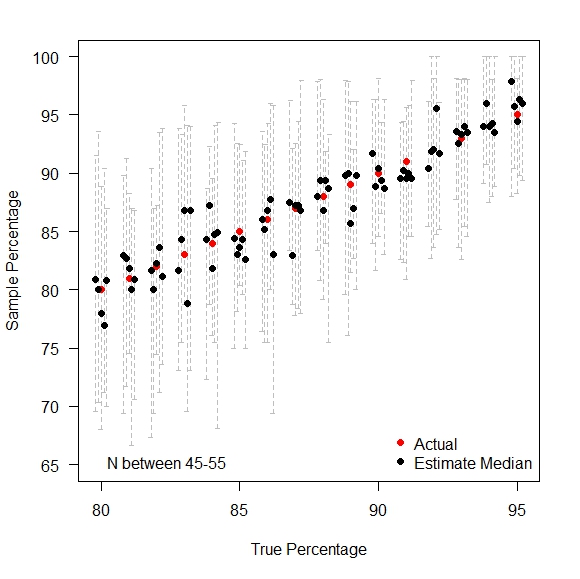

The moral of the story is that, when comparing many institutions with very similar performances, ranks are meaningless in practice. If anything needs to be compared, compare the raw values (%) so that people can see how little difference exists in practice. As the second graph shows, when each institution is treated in isolation the random variation over time still exists, but people can judge for themselves whether any observed difference is large enough to cause real concern (a jump of 3 percentage points can make a dramatic difference to the ranking position when everyone is tightly bunched together).
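To see why a 3 percentage point jump matters so much, here is a small worked example using the simulation’s setup, assuming for simplicity that every rival scores exactly its true rate. With 5 institutions at each whole percentage from 80% to 95%, every percentage point holds 5 institutions, so a 3 point change moves an institution past about 15 rivals. The helper `rank_if` is hypothetical.

```python
def rank_if(pct, others):
    """Rank (1 = best) an institution would get with `pct` against rivals' scores.

    Ties share the better rank.
    """
    return 1 + sum(o > pct for o in others)


# Rivals: 5 institutions at each whole percentage 80..95 (the post's setup),
# idealised as if each scored exactly its true rate.
field = [pct for pct in range(80, 96) for _ in range(5)]
others = field.copy()
others.remove(88)  # the institution we follow, with a true rate of 88%

before = rank_if(88, others)
after = rank_if(91, others)  # the same institution after a 3-point jump
print(before, after, before - after)  # prints: 36 21 15
```

A change well within ordinary sampling noise is enough to move the institution 15 places.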

I chose the range 45-55 because it is a common range for the number of responses in the National Student Survey for BSc Mathematics degrees. Looking at Bristol data for Key Stage 2, the 29 schools where at least 85% of pupils achieved level 4 or above in reading, writing and maths at KS2 had between 20 and 91 eligible students each, with a median of 45 [so the same range is sensible for schools too].
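The sample sizes matter because the random noise in an observed percentage shrinks only with the square root of the number of respondents: for binomial sampling, the standard deviation of an observed proportion is sqrt(p(1 - p)/n). The sketch below evaluates this for a true rate of 90% (an illustrative choice within the 80% to 95% band) at the Bristol extremes of 20 and 91 students, and at the 45 to 55 range used in the simulation.

```python
import math


def proportion_sd(p, n):
    """Standard deviation of an observed proportion from n respondents
    when the true rate is p (binomial sampling)."""
    return math.sqrt(p * (1 - p) / n)


# p = 0.90 is an illustrative true rate, not a figure from the data.
for n in (20, 45, 55, 91):
    print(f"n = {n:2d}: +/- {100 * proportion_sd(0.90, n):.1f} percentage points")
```

This gives roughly 6.7, 4.5, 4.0 and 3.1 percentage points respectively, so even for the largest school the typical random swing is about three to seven times the 1 point gap between adjacent true rates in the simulation.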